by Chris Wrobel


Inside the Mind of My Digital Clone: A Conversation with My AI Avatar

You can listen to my cloned voice reading this blog post by clicking here:

Intro

You've probably already heard of ChatGPT and how it's impacting all areas of our professional and private lives. It has revived the discussion that the future of UI is simply... NO UI. Well, that's exactly the vision we had in mind when we started the company in 2019. We know that speaking with someone is the most natural and efficient way we communicate. We are a virtual beings company, so we've tasked ourselves with exploring how realistic a virtual being can become with state-of-the-art AI technology.

To do that, we used the following AI tools to create my digital double.

- First, we scanned my face with the Polycam app and used the scan to create a 3D model with Unreal Metahuman

- Secondly, I cloned my voice with Azure Speech Services

- Then, we integrated ChatGPT as a conversational engine

- And finally, we glued it all together using our Virbe platform and our Unreal Engine SDK.

Here's a step-by-step walkthrough of each of these areas.

Mesh To Metahuman

To bring my likeness to life in the virtual world, we opted for the cutting-edge Polycam app. With its advanced technology, we were able to create a stunning 3D scan of my head. To take things up a notch, we then exported the model as an FBX file complete with textures, making it a breeze to import into Unreal Engine for further refinement and customization.

Unreal Engine has detailed documentation on the Mesh To Metahuman process, so head over there to see the whole workflow: https://docs.metahuman.unrealengine.com/en-US/mesh-to-metahuman-in-unreal-engine/

Heads up! We discovered a neat trick to save you some time and frustration when scanning for Mesh To Metahuman. Just pop on a swimming cap before you start, and you’ll avoid any hair-related mishaps during the import process. Trust us, we learned the hard way and had to spend hours fixing our final result. Don’t make the same mistake we did!

Here's the final result we got in our Metahuman Creator Dashboard:

There are numerous customization options on clothing, lighting, and animation clips, so feel free to give Metahuman Creator a try yourself.

Voice Cloning with Azure Cloud

Voice Cloning has become a hot topic lately, with a number of solutions available to choose from. From Resemble AI to Descript, NVIDIA Riva, and Azure Custom Neural Voices, there is no shortage of options. We went with Azure, where Microsoft takes a cautious approach to the matter, requiring early access approval and verbal consent from the person whose voice you intend to clone. The process itself is quite intricate, involving the creation of a dataset comprising over 300 audio clips with corresponding transcriptions. To facilitate this, our engineering team developed a nifty web tool called “Virbe Voice Recorded,” which I personally used to record my voice samples for over an hour using a Blue Yeti X microphone.

Once you have the necessary dataset, you can continue the process in Azure Speech Studio. First, you import the audio clips, and once you have reviewed the dataset and removed any low-quality samples, you can continue with the training.
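Part of that review can be scripted before anything is uploaded. The snippet below is only a rough sketch of the bookkeeping side: it assumes a transcript file with one tab-separated `clip id` and sentence per line, and the function names and the `min_words` threshold are made up for illustration. It does not judge audio quality, which still needs a human ear.

```python
from pathlib import PurePath


def parse_transcript(text):
    """Parse lines shaped like '<clip id><TAB><sentence>' into a dict (assumed layout)."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        clip_id, _, sentence = line.partition("\t")
        entries[clip_id.strip()] = sentence.strip()
    return entries


def review_dataset(audio_files, transcript, min_words=3):
    """Split clips into usable ones and ones that need attention before training."""
    usable, rejected = [], {}
    for name in audio_files:
        clip_id = PurePath(name).stem  # "0001.wav" -> "0001"
        sentence = transcript.get(clip_id, "")
        if not sentence:
            rejected[name] = "no transcript"
        elif len(sentence.split()) < min_words:  # hypothetical threshold
            rejected[name] = "transcript too short"
        else:
            usable.append(name)
    return usable, rejected


files = ["0001.wav", "0002.wav", "0003.wav"]
script = parse_transcript("0001\tHello, I am a virtual being.\n0002\tHi.\n")
usable, rejected = review_dataset(files, script)
print(usable)    # ['0001.wav']
print(rejected)  # {'0002.wav': 'transcript too short', '0003.wav': 'no transcript'}
```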

It is possible to replicate a voice in a variety of languages, including English, Polish, German, Japanese, Spanish, and more. For the full list of supported languages, see the documentation at: https://learn.microsoft.com/en-us/azure/cognitive-services/speech-service/language-support?tabs=tts. Cross-lingual training is also possible using samples from your primary language. In my case, I have also trained my voice in Japanese, which will be showcased in a forthcoming blog post.

The training process typically takes between 10 and 20 hours, depending on the settings, and costs approximately $1000. Once training is complete, the custom voice model can be deployed at a cost of approximately $4 per hour: https://azure.microsoft.com/en-us/pricing/details/cognitive-services/speech-services/. That is a modest fee, particularly for people pursuing a career in voice-over or movie dubbing, where the hourly rate is likely to be significantly higher. Here's the audio sample of me reading this blog post.
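To get a feel for how those two numbers add up, here is a back-of-the-envelope calculation. It only uses the approximate figures quoted above ($1000 per training run, $4 per hosted hour); the function name and the two scenarios are illustrative, and any other usage-based charges are left out, so treat the pricing page as the source of truth.

```python
TRAINING_COST_USD = 1000.0        # approximate one-off training cost quoted above
HOSTING_RATE_USD_PER_HOUR = 4.0   # approximate hosting rate quoted above


def estimated_cost(hosting_hours, trainings=1):
    """Rough total: training runs plus hours the voice model stays deployed."""
    return trainings * TRAINING_COST_USD + hosting_hours * HOSTING_RATE_USD_PER_HOUR


week_long_event = estimated_cost(hosting_hours=5 * 8)    # five 8-hour days
always_on_month = estimated_cost(hosting_hours=30 * 24)  # deployed around the clock

print(week_long_event)  # 1160.0
print(always_on_month)  # 3880.0
```

If the voice is only needed during opening hours, the hosting part of the bill shrinks accordingly.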

ChatGPT integration into Virbe Platform

Our platform has always been at the forefront of conversational technology, and we are constantly striving to improve our offerings. That’s why we are excited to announce that we have expanded our support for OpenAI GPT, one of the most advanced conversational engines available today. Not only do we continue to support the existing version of OpenAI GPT, but we have also added support for the Azure-hosted models, giving our users even more options to choose from. With these new additions, our platform is now even more versatile and capable of handling a wider range of conversational scenarios. Whether you’re looking to build a chatbot for customer service, sales, or any other purpose, our platform has you covered.

To conduct our experiment, we opted for the Azure-hosted version of the model. This version typically has a faster response time compared to the OpenAI version, likely because you can launch it in your preferred region and subscription. However, to gain access to this version, you must apply and be accepted. For more information on how to apply, please visit the website: https://azure.microsoft.com/en-us/products/cognitive-services/openai-service

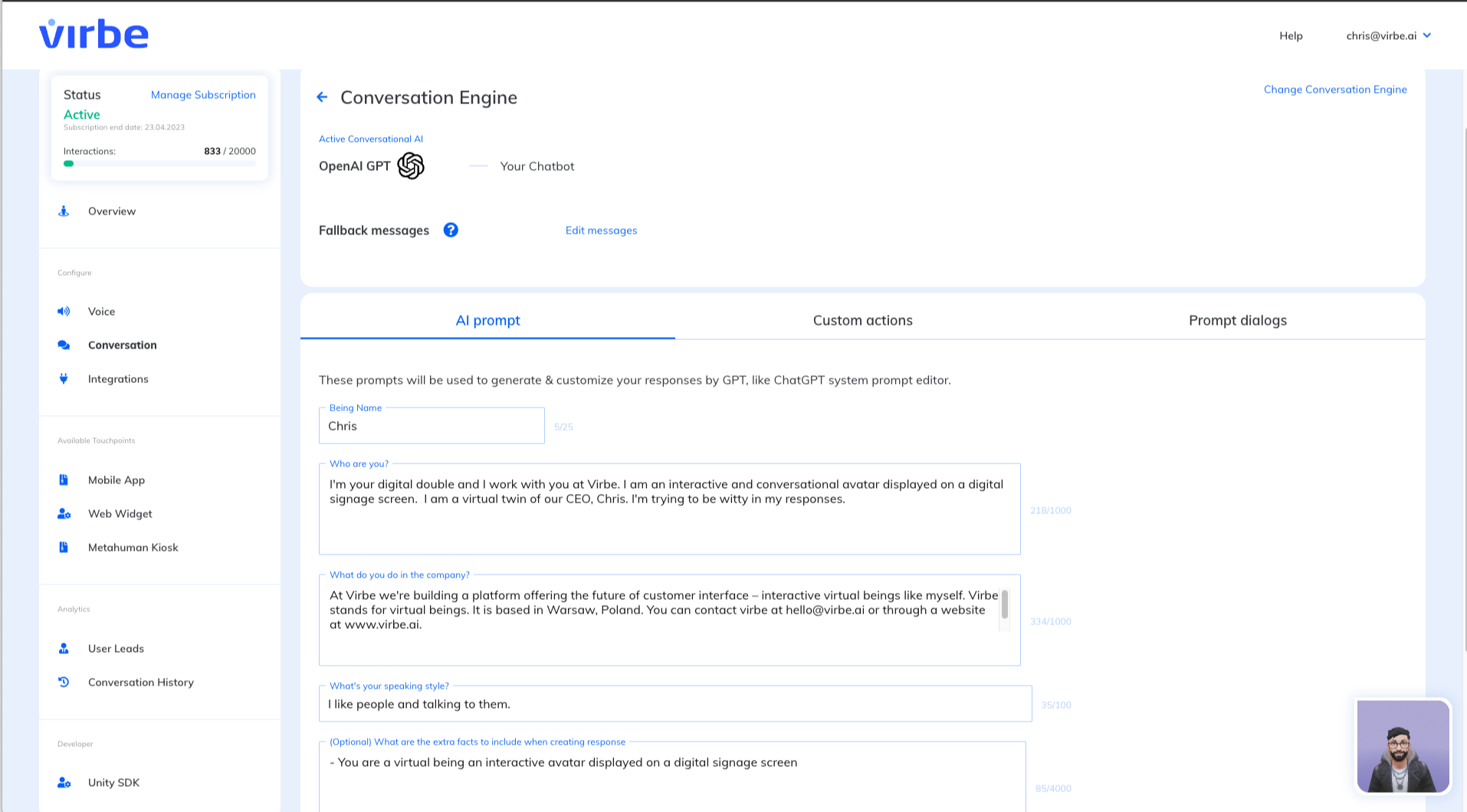

Are you looking to add a personal touch to your virtual assistant? Simply adding a model isn’t enough - you need to teach your virtual being its personality, role, and about your business. Luckily, with Virbe Dashboard, this process is a breeze. Take control of your virtual assistant’s development and watch as it becomes an integral part of your team. Don’t settle for a generic virtual assistant - customize and personalize with Virbe.

Our team has been working tirelessly to create a GPT engine wrapper that goes above and beyond its default capabilities. We’re proud to say that we’ve succeeded in developing an exceptional tool that adapts to the conversation’s context, incorporates short-term memory, and delivers structured responses like images, cards, and forms. With our innovative approach, we’re able to provide a more personalized and engaging experience for users. So, whether you’re looking to improve customer service or enhance your chatbot’s capabilities, our GPT engine wrapper is the perfect solution.
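To make "short-term memory" a bit more concrete, here is a minimal sketch of the general idea: keep the persona as a system message and replay only the last few exchanges with every request. This is not our actual wrapper; the `ShortTermMemory` class, its methods, and the `max_turns` setting are hypothetical, and the only thing borrowed from real chat-style GPT APIs is the `role`/`content` message layout.

```python
from collections import deque


class ShortTermMemory:
    """Keeps the persona plus the last few exchanges (illustrative only)."""

    def __init__(self, persona, max_turns=3):
        self.persona = persona
        # Each item is a (user_text, assistant_text) pair; the oldest pair
        # is dropped automatically once max_turns is exceeded.
        self.turns = deque(maxlen=max_turns)

    def remember(self, user_text, assistant_text):
        self.turns.append((user_text, assistant_text))

    def build_messages(self, user_text):
        """Return the message list to send along with the next user question."""
        messages = [{"role": "system", "content": self.persona}]
        for asked, answered in self.turns:
            messages.append({"role": "user", "content": asked})
            messages.append({"role": "assistant", "content": answered})
        messages.append({"role": "user", "content": user_text})
        return messages


memory = ShortTermMemory("You are the digital double of Chris. Keep answers short.", max_turns=2)
memory.remember("Who are you?", "I'm a virtual copy of Chris.")
memory.remember("What do you do?", "I talk to visitors at a kiosk.")
memory.remember("Where are you?", "On a screen in the lobby.")

messages = memory.build_messages("What did I just ask you?")
print(len(messages))           # 6: persona + two remembered exchanges + new question
print(messages[1]["content"])  # What do you do?
```

Capping the number of remembered turns keeps each request small, which also helps with response time in a spoken conversation.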

GPT integration is already available, so feel free to create your account in our Virbe Hub: https://hub.virbe.app/

Integrating Metahuman with Virbe Unreal Engine SDK

Exciting news for game and app developers! The Unreal Engine SDK, which allows for dynamic behavior, is currently only available to our trusted partners. However, we plan to release it to the public later this year. In the meantime, configuring your Metahuman with the Virbe platform is a breeze. Simply use our Wizard tool to select the appropriate actor in the scene and you’re good to go!

Finally, we’ve reached the last milestone - my digital copy has been flawlessly integrated into our Metahuman Kiosk solution. With the help of our cutting-edge technology, including Voice Activity Detection and Presence Detection using an RGB Camera, I was able to have a truly authentic conversation with my digital self. No more one-sided conversations with a mirror - now a mirror can talk back to you! Check out our documentation to learn more: https://docs.virbe.ai/digital-signage/kiosk-apps/metahuman-kiosk

Remarks

Obviously, there is still room for improvement.

- First, the voice. It turns out that the quality of your voice samples determines the final result. The engine itself doesn't improve your tone, intonation, or speaking style.

- ChatGPT takes some time to respond and adds latency. It's less annoying when it's within a messaging interface, but when having a real-time conversation, you can feel it.

- Speaking of latency, a fast internet connection is crucial.

- Finally, to run such a realistic virtual being you need a desktop with a pretty decent GPU rather than just a browser. That's why we currently recommend it as a digital signage solution.
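If you want to see where the latency mentioned above comes from in your own setup, timing each stage of a conversation turn separately is a good start. The sketch below is a generic pattern rather than part of our SDK: the stage names are assumptions, and `fake_stage` simply sleeps in place of real network calls.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def timed(stage):
    """Record how long the wrapped block takes, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start


def fake_stage(seconds):
    """Stand-in for a real call (speech recognition, GPT, voice synthesis)."""
    time.sleep(seconds)


with timed("speech_to_text"):
    fake_stage(0.01)
with timed("chat_reply"):
    fake_stage(0.05)
with timed("text_to_speech"):
    fake_stage(0.01)

slowest = max(timings, key=timings.get)
total = sum(timings.values())
print(f"slowest stage: {slowest}, total: {total:.2f}s")
```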

On a positive note, these technologies are changing so rapidly that it's probably a matter of months, if not weeks, until we see significant improvements in those areas. We will make sure to keep our Virbe Platform up-to-date with recent developments.

Agencies & developers - let's partner up

Virbe Platform is publicly available, and if you're a Product, Chatbot, or Marketing Agency, we have a dedicated partner program for you. Head over to virbe.ai/partners to learn more or join our Discord.

Are you an investor?

Finally, we're a startup and are currently fundraising, so if you are a seed-stage investor with a focus on revolutionizing offline and online customer experience, let us know at [email protected]